By Martina Raue

Make a thumbs up, extend your arm all the way, close one eye, and see if you can hide the animal with your thumb. This rule of thumb is suggested by the organization Leave No Trace to help children to judge the safe distance from a wild animal. Rules of thumb that make complex decisions a little easier have been termed heuristics in the scientific literature. Making decisions can be overwhelming when there is too much information or too little time. We can consciously process only a limited amount of information at a time, a characteristic which Herbert Simon termed bounded rationality. As a result, people simplify decisions by using heuristics. Heuristics are simple decision rules that allow judgments of acceptable accuracy without integrating all the information available. They play an important role in daily judgments and decision-making processes. For example, we can safely cross a street without accurately analyzing the speed of approaching cars. Children learn quickly how to do this long before taking their first physics class. However, the use of heuristics may also lead us astray and leave us prone to biases. In fact, a debate is ongoing over whether heuristics primarily serve to help us or harm us.

Heuristics and Biases

The first scholars to systematically study heuristics were psychologists Amos Tversky and Daniel Kahneman in their heuristics and biases program. Tversky and Kahneman wondered how people come up quickly with intuitive answers to complex questions. In numerous studies, they demonstrated that a basic mechanism of most heuristics is the substitution of an easy question for a more complex one. For example, if asked about the likelihood of a terrorist attack, some people may answer based on how easily they can think of a recent terrorist attack, which is known as the availability heuristic. If asked about the likelihood that a given person is a potential criminal, some people may answer based on subjective associations with the person’s looks or nationality, which is known as the representativeness heuristic. The heuristics and biases program has inspired researchers in psychology and economics by showing how people simplify decisions, and especially by demonstrating how human judgment can be biased and result in systematic errors. These insights resulted in behavioral interventions known as nudges, which respond to biases and structure choices in a way that makes it easier for people to make better decisions. The use of nudges has been promoted as a non-regulatory and cost-efficient policy instrument. For example, automatic enrollment in a savings plan is a nudge that responds to inertia and present bias, which often lead people to put off retirement planning.

Fast and Frugal Heuristics

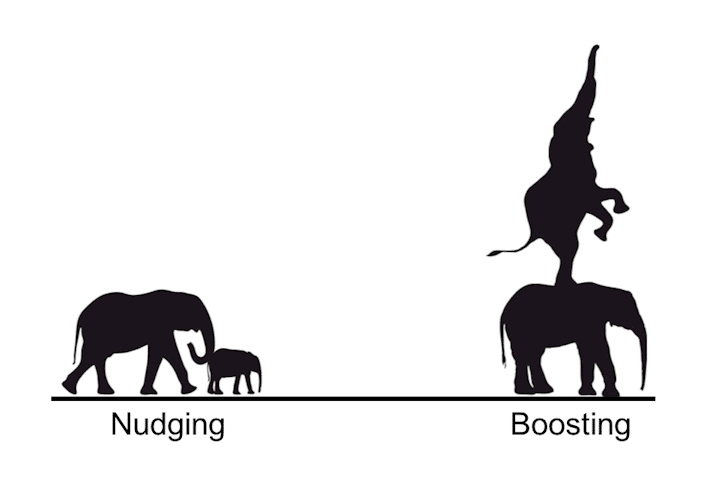

Another group of researchers has emphasized the advantages of using heuristics in situations of uncertainty rather than the potential biases resulting from their use. Gerd Gigerenzer and his colleagues investigate fast and frugal heuristics, which are simple rules that do not necessarily sacrifice accuracy. They describe decisions as ecologically rational when they reduce effort and increase accuracy by matching the mind’s capacities with the current environment. Fast and frugal heuristics can sometimes outperform complex algorithms in real-world situations. A typical example is the gaze heuristic, which describes how a person or a dog can ably catch a ball or a frisbee without analyzing all the factors that affect the object’s trajectory. Like the advocates of nudges, fast and frugal researchers call for designing environments in ways that trigger successful heuristic strategies. However, they suggest environments that support informed decision-making without steering people in a certain direction, as nudges do. As an alternative to nudges, Till Grüne-Yanoff and Ralph Hertwig introduced boosts, which are based on fast and frugal heuristics and aim at expanding (boosting) people’s decision-making competences by helping them apply their existing skills and tools more effectively. For example, a boost of statistical understanding for informed medical decisions may present statistical information as frequencies rather than probabilities. Boosts in the form of decision trees have been quite successful in medicine, for example, in supporting informed decision-making by both patients and providers.
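To make the idea of presenting frequencies rather than probabilities more concrete, here is a minimal sketch in Python. It restates a test's probabilities as counts of people in a reference group. The function names and all of the numbers are hypothetical and chosen only for illustration; they do not come from any study or from the authors discussed here.

```python
def natural_frequencies(base_rate, sensitivity, false_positive_rate, population=1000):
    """Restate probabilities as whole-number counts in a reference population.

    All inputs are illustrative assumptions, not real medical data.
    """
    affected = round(population * base_rate)
    unaffected = population - affected
    true_positives = round(affected * sensitivity)
    false_positives = round(unaffected * false_positive_rate)
    return {
        "population": population,
        "affected": affected,
        "true_positives": true_positives,
        "false_positives": false_positives,
    }


def share_of_positives_affected(freq):
    """Among everyone who tests positive, what share is actually affected?"""
    positives = freq["true_positives"] + freq["false_positives"]
    return freq["true_positives"] / positives if positives else 0.0


# Hypothetical inputs: 1% base rate, 90% sensitivity, 9% false-positive rate.
freq = natural_frequencies(base_rate=0.01, sensitivity=0.9, false_positive_rate=0.09)
share = share_of_positives_affected(freq)

print(
    f"Out of {freq['population']} people, {freq['affected']} are affected. "
    f"Of those, {freq['true_positives']} test positive. "
    f"Of the rest, {freq['false_positives']} also test positive."
)
```

Read as counts, the answer to "how many people with a positive test are actually affected?" can be taken directly from two numbers (true positives out of all positives), whereas the probability format requires combining three separate percentages.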

The Debate

Heuristics are often portrayed as a deviation from optimal reasoning, yet they can also be viewed as the optimal human strategy to reduce complexity in a given situation. A public discussion between Gigerenzer and Kahneman and Tversky in the 1990s was the beginning of this debate, which is still ongoing. In the 2016 Behavioral Economics Guide, Gerd Gigerenzer congratulates Daniel Kahneman and Amos Tversky for promoting heuristics in psychology, before lamenting their linking of heuristics to systematic errors and biases, which, in his opinion, has led to the wrong assumption that humans are irrational, lack accuracy, or are simply not very smart. Daniel Kahneman, however, has repeatedly stated that he and Amos Tversky never intended to show that human choices are irrational, but rather that human beings are not well described as purely rational agents. Ultimately, both groups of researchers agree that heuristics generally work well, and that in many cases a good-enough or satisfying outcome is sufficient and an optimal outcome not feasible. It is unfortunate that the two approaches to heuristics are still seen as, and still act as, opposing parties.

Nudges vs. Boosts

Image based on a presentation by Ralph Hertwig (2018) at the German Rectors’ Conference.

Uncertainty, time constraints, and incomplete knowledge are all parts of everyone’s daily life; consequently, heuristics are useful tools we all rely on, often with success and sometimes with failure. The two approaches that study heuristics do not have to be conflicting, as both schools offer important insights into human decision-making and valuable tools to support decisions under uncertainty. In a recent article in Perspectives on Psychological Science, Ralph Hertwig and Till Grüne-Yanoff congratulate Richard Thaler and Cass Sunstein for drawing attention to the importance of behavioral science in policy by introducing nudges. The authors acknowledge that some nudges, such as labels and reminders, are educative in nature and, thus, are similar to what the authors term short-term boosts. That is, both offer additional information in a way that is easy to understand and follow, but may not equip people with a new competency (as long-term boosts do). As opposed to boosts and educative nudges, traditional nudges, such as defaults, do not require cognitive engagement or motivation from the decision-maker. While nudges may be effective policy instruments, there should be room for more approaches, as Hertwig and Grüne-Yanoff argue.

Consider, for example, a complex issue such as climate change. Protecting the environment is a task for the government and the people. In order to engage people in pro-environmental behavior, successful interventions need to consider human decision-making in situations of uncertainty. Suggested interventions in the form of nudges include green defaults (e.g., automatic enrollment in green energy programs), the re-framing of information (e.g., highlighting social benefits rather than personal sacrifice), conveying social norms (e.g., comparing one’s energy consumption to the neighbors’), and using eco-labels. Eco-labels may count as a boost as well if they target consumers’ competence and boost their understanding of the pro-environmental features of the product. Communicating risks around climate change in a way that is easily understandable, such as by using simple graphs, could be a way to boost pro-environmental intentions. These interventions would make it easier for people to behave pro-environmentally despite their uncertainty, and would tackle the larger issue from different angles. However, the distinction between nudges and boosts does not seem to be clear to many. In the 2017 Behavioral Economics Guide, Cass Sunstein points out that people sometimes make mistakes and that in such cases an improvement in choice architecture might help. He also acknowledges, however, that these improvements might take the form of a boost. Rather than making a clear distinction between the approaches, it may be practically more useful to integrate them in one framework (for example, based on their level of education or information).

Conclusion

Both nudges and boosts are powerful tools based on empirical behavioral science that may improve and support decision-making. How could these and other approaches be incorporated into one useful framework? While the discussion on how to use these tools to support decisions and change behavior has already begun (see, for example, Hertwig’s overview of rules for using boosts over nudges in policy), the wider community of researchers and practitioners in behavioral economics should engage in integrating both views and finding empirical support for their use in various contexts, as discussions often remain on the theoretical level. A clear framework that integrates various approaches to support decision-making may offer choice architects a repertoire of valuable tools depending on the setting, goals, and the characteristics of the decision-maker.

Further Readings

Kelman, M. (2011). The Heuristics Debate. New York, NY: Oxford University Press.

Raue, M., & Scholl, S. G. (2018). The Use of Heuristics in Decision-Making under Risk and Uncertainty. In M. Raue, E. Lermer, & B. Streicher (Eds.), Psychological Perspectives on Risk and Risk Analysis – Theory, Models and Applications. New York, NY: Springer.