By Alice Solda

Why Overconfidence?

People make decisions based on their perceptions of their own abilities. The outcome of those decisions depends, at least in part, on how accurately they evaluate those abilities. Most standard theories in economics and psychology assume that people collect and process information in a way that gives them a relatively accurate perception of reality. However, empirical evidence suggests that this assumption may often be unwarranted.

Overconfidence, the overestimation of one’s own ability, is one of the most widely documented biases in belief formation. There is abundant evidence that individuals are overconfident in various domains: people believe they are more attractive, smarter, and better drivers than they actually are. While overconfidence can sometimes be attributed to simple mistakes, a growing body of evidence suggests that the bias can instead be strategic.

Overconfidence as a Strategy

Overconfidence is said to be “strategic” when it emerges in situations in which it provides an advantage. Research in both economics and psychology has documented several benefits of being overconfident: it provides psychological benefits (such as enhanced self-esteem and better mental health) and motivates individuals to undertake difficult projects. However, from an evolutionary perspective, it is hard to see why evolution would favor a trait that provides only psychological benefits while imposing real material costs.

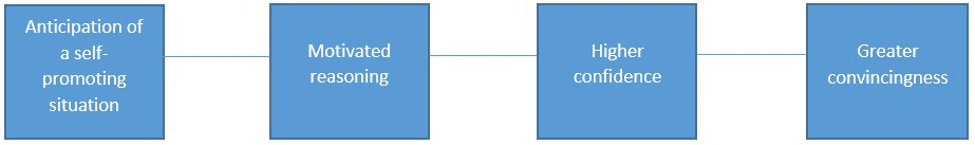

A recent strand of research instead proposes that overconfidence is advantageous when deceiving others. Deception, the act of causing someone to believe something that is untrue, can provide access to resources that are difficult to obtain honestly, which is why it is frequently observed in daily life. However, lying imposes a cognitive load on the deceiver: the liar has to keep two versions of the story in mind (the reality and the fiction), which requires effort. As a result, deceivers may emit behavioral cues of deception, which can lead to substantial costs (punishment, for example) if the deception is detected. William von Hippel and Robert Trivers argued that self-deception may lower the probability that a lie is detected by removing the cognitive costs of lying and thereby suppressing cues of deception. In a nutshell, by deceiving themselves about their own abilities or characteristics, overconfident individuals become more successful at deceiving others.

In line with this idea, studies in psychology have shown that overconfident individuals achieve higher social status in groups and become more popular over time. Building on these results, recent papers have investigated whether overconfidence emerges primarily when it provides such advantages, and results from laboratory experiments show that it does. Gary Charness and co-authors showed that people were more likely to state higher levels of confidence when doing so would deter a competitor from entering a tournament. More recently, Peter Schwardmann and Joel van der Weele showed that individuals who expected to be interviewed were more overconfident about their abilities than those who did not and, in turn, were more successful at convincing interviewers that they were competent.

Our experimental results are also in line with the idea of strategic overconfidence. We found that participants who were given a persuasion-oriented goal (convincing a group of peers that they had performed well at a task) were more overconfident than participants who were given an accuracy-oriented goal (estimating their performance at the task as accurately as possible). In addition, we found that more overconfident individuals were more successful at convincing their peers that they had performed well at the task.

How Do People Self-Deceive?

Compared with the studies mentioned above, our paper adds a further dimension: we seek to understand the role of biased information processing in how people form beliefs about their performance. In our experiments, participants were assigned to one of three feedback conditions. One third of the participants received no information about their performance, one third received a fairly representative sample of information about their performance, and one third were allowed to gather information about their performance themselves.

In the first condition, participants could rely only on their own impression of how well they had performed during the task. In the second condition, participants could engage in biased encoding to form positively biased beliefs about their performance. For example, previous studies have shown that even when unwanted information is encoded, people can favor the evidence they are motivated to believe by putting more weight on good news than on bad news. In the third condition, participants had even more room to become overconfident, as they could prevent unwelcome information from being encoded in the first place by actively avoiding sources of information that might hold bad news. They could also allocate their attention unevenly between favorable and unfavorable information.
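To make “putting more weight on good news than on bad news” concrete, here is a minimal Python sketch of asymmetric belief updating in log-odds form. Everything in it is an illustrative assumption rather than the model estimated in our paper: the belief is the probability of having performed well, each piece of feedback is a binary signal that is correct with probability `q = 0.75`, and the hypothetical parameters `w_good` and `w_bad` scale how far good and bad news move the belief. Setting both to 1 corresponds to Bayesian updating.

```python
import math


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1 - p))


def inv_logit(x: float) -> float:
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))


def update(belief: float, signals: list[int], q: float = 0.75,
           w_good: float = 1.0, w_bad: float = 1.0) -> float:
    """Update the belief of having performed well after binary feedback.

    Illustrative assumptions: signals are 1 (good news) or 0 (bad news),
    each signal is correct with probability q, and w_good / w_bad are
    hypothetical weights on good / bad news (1.0 and 1.0 = Bayesian).
    """
    step = math.log(q / (1 - q))  # log likelihood ratio of a single signal
    x = logit(belief)
    for s in signals:
        # Good news pushes log-odds up, bad news pushes them down.
        x += w_good * step if s == 1 else -w_bad * step
    return inv_logit(x)


signals = [1, 0, 1, 0, 1, 0]  # balanced feedback: three good, three bad
bayes = update(0.5, signals)
biased = update(0.5, signals, w_bad=0.5)  # bad news gets half the weight
print(f"Bayesian: {bayes:.3f}, asymmetric: {biased:.3f}")
```

With balanced feedback, the Bayesian belief returns exactly to the 0.5 prior, while halving the weight on bad news leaves it near 0.84: the same evidence yields an inflated self-assessment.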

Our experimental results provide evidence that the degree to which people self-deceive depends on their mental wiggle room. We found that participants who were asked to convince others that they had performed well searched for information selectively, in a way that made good news about their performance more likely. By oversampling their answers to easier questions, they were able to give themselves more positive feedback.
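Why oversampling easy questions inflates feedback can be seen in a small simulation. This is a sketch on a hypothetical answer sheet, not data from our experiment: 20 questions, 10 easy (9 answered correctly) and 10 hard (4 answered correctly), so the true score is 0.65. The function `mean_feedback` (an illustrative name) averages the share of correct answers in random feedback draws of `k` answers, either from all questions (a representative sample) or from the easy questions only (a self-selected sample).

```python
import random

# Hypothetical answer sheet: (is_easy, is_correct) for each of 20 questions.
# Easy: 9 of 10 correct. Hard: 4 of 10 correct. True score = 13/20 = 0.65.
ANSWERS = [(True, i < 9) for i in range(10)] + [(False, i < 4) for i in range(10)]


def mean_feedback(pool, k=6, trials=10_000, seed=0):
    """Average share of correct answers among k answers drawn from pool."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    total = 0.0
    for _ in range(trials):
        draw = rng.sample(pool, k)  # draw k answers without replacement
        total += sum(correct for _, correct in draw) / k
    return total / trials


true_score = sum(correct for _, correct in ANSWERS) / len(ANSWERS)
representative = mean_feedback(ANSWERS)                           # all questions
self_selected = mean_feedback([a for a in ANSWERS if a[0]])       # easy only
print(f"true {true_score:.2f}, representative {representative:.2f}, "
      f"self-selected {self_selected:.2f}")
```

The representative average should land close to the true score of 0.65, while the self-selected average lands near 0.9, even though the underlying performance is identical.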

Why Does It Matter?

Over the years, overconfidence has been blamed for major world-changing events such as wars, financial crises and climate change. The disastrous consequences of these events around the world highlight the importance of understanding overconfidence in order to prevent similar mistakes in the future.

First, our findings improve our understanding of the situational determinants of overconfidence in social interactions. Overconfidence is most likely to arise in competitive situations in which persuasion plays an important role in accessing valuable resources and underlying ability is not easily assessed. This has implications for economic theory, especially for how beliefs are handled in theoretical models. Most standard theories assume that individuals are Bayesian-rational, meaning that they collect and process information in a way that gives them a relatively accurate perception of reality. If, instead, beliefs are ‘chosen’, this Bayesian assumption is violated, or at least must be modified so that beliefs reflect preferences as well as prior beliefs.
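For readers who want the Bayesian benchmark in symbols: a belief about one’s ability θ is updated after a signal s by Bayes’ rule. The log-odds form below, for two ability levels H and L, adds a weight w(s) on the evidence. The weight w(s) and the split into good and bad news are illustrative notation introduced here, not the specification used in our paper. Bayesian updating corresponds to w(s) = 1 for every signal, whereas weighting good news more heavily than bad news (w_good > w_bad) is one stylized way in which preferences could enter belief formation.

```latex
\Pr(\theta \mid s) = \frac{\Pr(s \mid \theta)\,\Pr(\theta)}{\sum_{\theta'} \Pr(s \mid \theta')\,\Pr(\theta')}

\log\frac{\Pr(H \mid s)}{\Pr(L \mid s)} = \log\frac{\Pr(H)}{\Pr(L)} + w(s)\,\log\frac{\Pr(s \mid H)}{\Pr(s \mid L)},
\qquad
w(s) = \begin{cases} w_{\text{good}} & \text{if } s \text{ is good news} \\ w_{\text{bad}} & \text{if } s \text{ is bad news} \end{cases}
```

With w_good = w_bad = 1, the second line is exactly Bayes’ rule written in log-odds form.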

Second, our findings provide an explanation for the persistence of overconfidence in social interactions, despite its costs. The existence of strategic benefits generates a demand for inflated beliefs that leads to inefficiencies when individuals’ interests conflict. This insight may help organizations and policy makers identify situations in which overconfidence is likely to be costly for society and lay the foundations for policies to prevent or mitigate its negative effects.